Ads in ChatGPT Might Fund Innovation & Bills

But They Could Also Redefine, If Not Erode, Trust

At OpenAI’s scale, funding isn’t optional.

It’s existential.

That reality is now colliding with something far more fragile than infrastructure or compute budgets: T-R-U-S-T.

Over the past few weeks, the tech spotlight has been fixed on reports that advertising may eventually arrive inside ChatGPT. I posted a brief note on LinkedIn that laid out the mechanics and guardrails being considered, and on paper, much of it looks reasonable.

But stepping back, this moment deserves more scrutiny than a typical new-ad-format debate.

Because this isn’t just another ad model, and the stakes are categorically different.

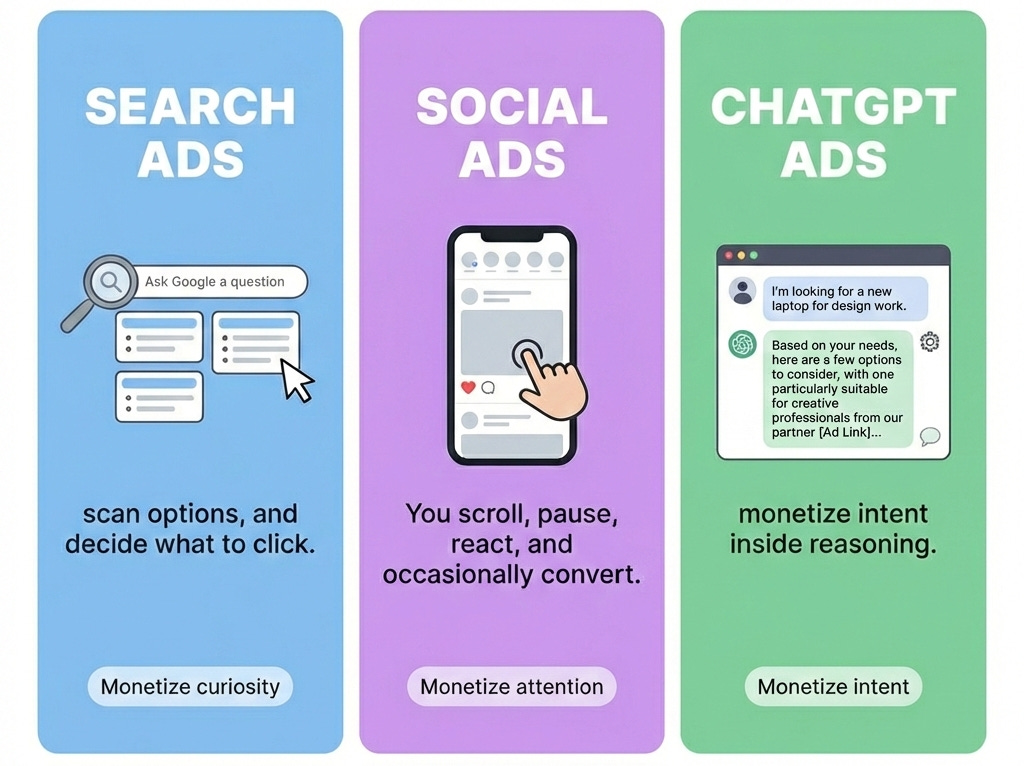

ChatGPT Ads Aren’t Like Search or Social

To understand what’s at risk, we need to be precise about what would actually be monetized.

The distinction from search and social changes everything.

When someone asks ChatGPT how to fix a problem, select a tool, structure a strategy, or make a decision, they aren’t browsing.

They are delegating thinking.

ChatGPT isn’t adjacent to the decision.

It is shaping the frame within which the decision is made.

That level of influence is unprecedented in consumer technology.

Power & Risk of Cognitive Delegation

ChatGPT’s value isn’t just that it answers questions.

It’s that it allows people to:

Externalize thinking

Compress complexity

Move faster without fully re-deriving context

Trust outputs enough to act on them

This is why people embed it into workflows, build businesses on top of it, and rely on shared outputs without constant verification.

That trust is not incidental.

It is the product.

And advertising touches it directly.

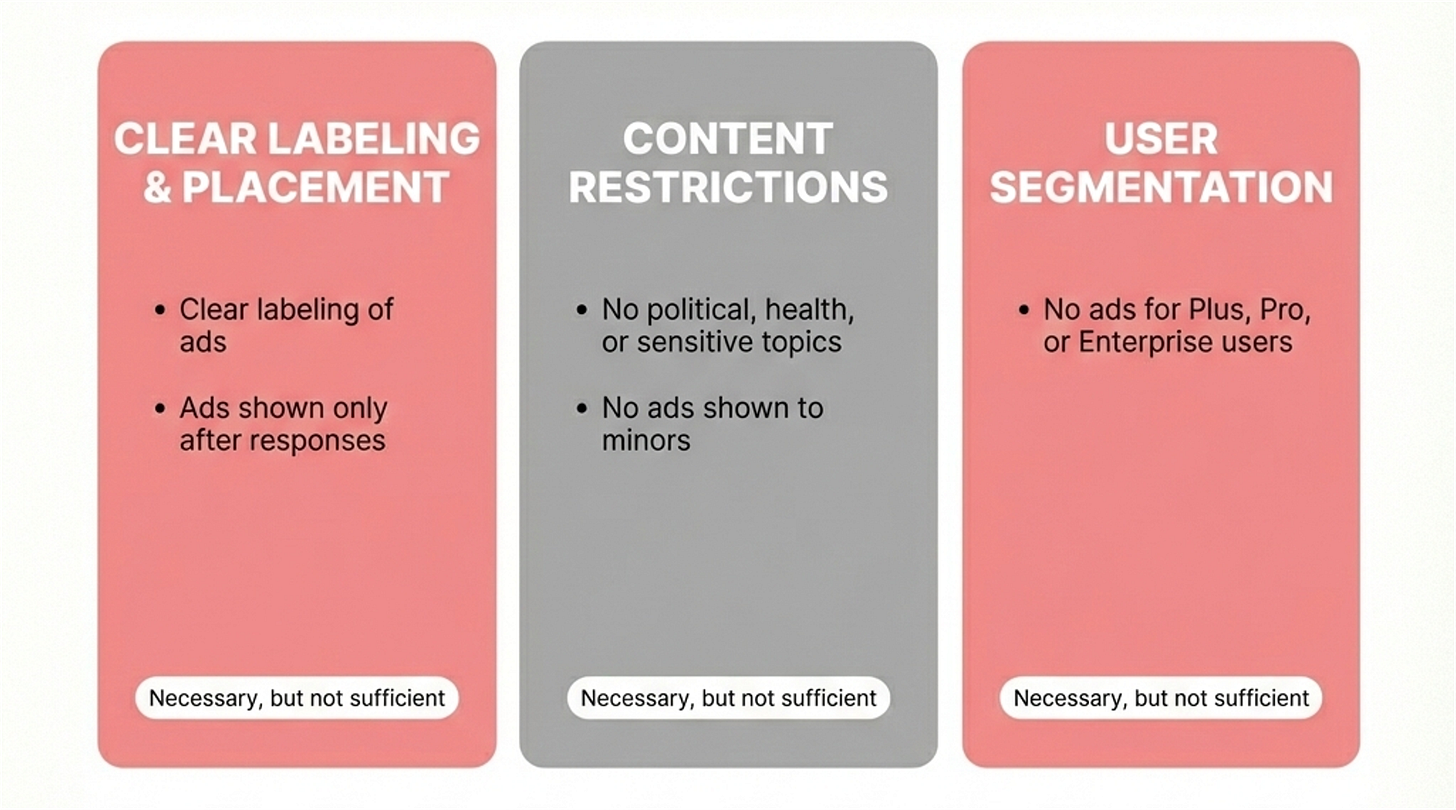

Guardrails: Necessary, But Not Sufficient

To OpenAI’s credit, the proposed constraints look serious:

Clear labelling of ads

Ads shown only after responses

No political, health, or sensitive topics

No ads shown to minors

No ads for Plus, Pro, or Enterprise users

Are these necessary?

Yes.

Are they sufficient?

Not yet.

Because the real risk isn’t blatant manipulation or obvious shilling.

It’s something quieter.

Slow Creep of Entanglement

Every dominant ad system in history followed a similar arc:

Strong principles at launch

Rapid scale

Rising infrastructure costs

Increasing revenue pressure

At some point, the internal question subtly shifts from “Should we?” to “Why not just this once?”

No single decision breaks the system.

But accumulation does.

What makes ChatGPT different from every platform before it is the depth of trust users grant it.

Once ads blur, even slightly, the line between reasoning and promotion, trust doesn’t collapse overnight.

It erodes in three stages:

Confidence

Reliance

Legitimacy

And by the time the erosion is visible, it’s already entrenched.

The Real Design Principle That Matters

This debate is often framed as a UI problem.

It isn’t.

This is a system-level design problem.

Ads shouldn’t just be labelled. They must be structurally separated from cognition itself.

Not adjacent to reasoning.

Not shaping the framing.

Not influencing which options are surfaced first.

The moment users begin to wonder:

“Is this answer optimised for truth, or for yield?”

ChatGPT stops being an assistant.

And starts being an intermediary.

That transition is subtle, but profound.

Innovation Needs Funding & Funding Needs Trust

There’s no denying the reality:

Advanced AI is expensive.

Breakthroughs require capital.

Ads may well fund the next leap forward.

But trust is what allows those breakthroughs to matter.

Without trust:

Outputs get second-guessed

Workflows fracture

Businesses hedge

Users disengage quietly

And quiet disengagement is far more dangerous than public backlash.

Outrage can be addressed.

Silence is harder to recover from.

The Standard That Gets Set Now Will Last

If OpenAI gets this right, it won’t just unlock a revenue stream.

It will set a new global standard for how AI systems can be monetized without corrupting their core purpose.

If it gets it wrong, the damage won’t be dramatic.

There won’t be a scandal or a single breaking moment.

There will just be a slow, collective pulling back.

And in systems built on trust, that’s the hardest thing to rebuild.

Ads may fund innovation.

But trust is what allows innovation to compound.

This moment isn’t about whether ChatGPT should have ads.

It’s about whether intelligence itself can be monetized without being compromised.

That’s a much higher bar.

And it’s one worth holding.